Coinciding as it does with the increased time spent indoors during a global pandemic, the findings of Online Nation, Ofcom’s annual report on screen-time habits, hold few surprises. But one, perhaps, is the extent to which time online proved to be a negative experience for UK children. The Internet may have been a vital lifeline for education and socialising during the pandemic, but, as Ofcom’s report shows, it also increased children’s exposure to frightening and troubling experiences online.

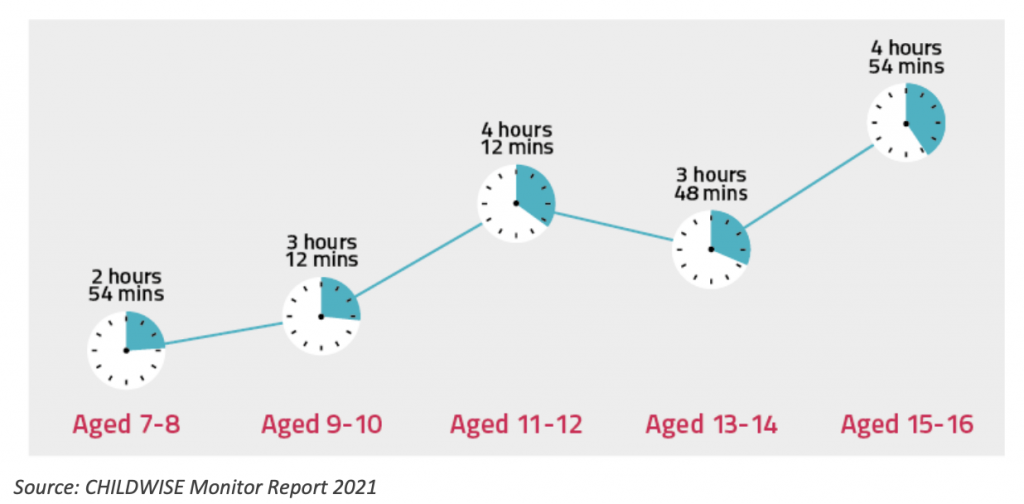

Unsurprisingly, over the course of 2020, Ofcom found that young people’s screen time was far greater than that of older generations. Even children aged seven to eight were found to be spending an average of 2 hours 54 minutes a day online, a figure that almost doubled by the time they reached 15 to 16. Much of UK children’s screen time was reportedly spent watching video content and gaming, and YouTube was a constant in their online lives, used by nearly nine in ten children across all age groups, from three- to four-year-olds to teenagers.

Despite a minimum age of 13 being more or less standard across social platforms, the report found that two thirds of UK 11-year-olds admitted to having a social media presence. Among 12- to 15-year-olds, 16% said they knew how to ‘get round’ parental and other controls designed to stop them visiting certain sites, and 8% admitted to actively doing so.

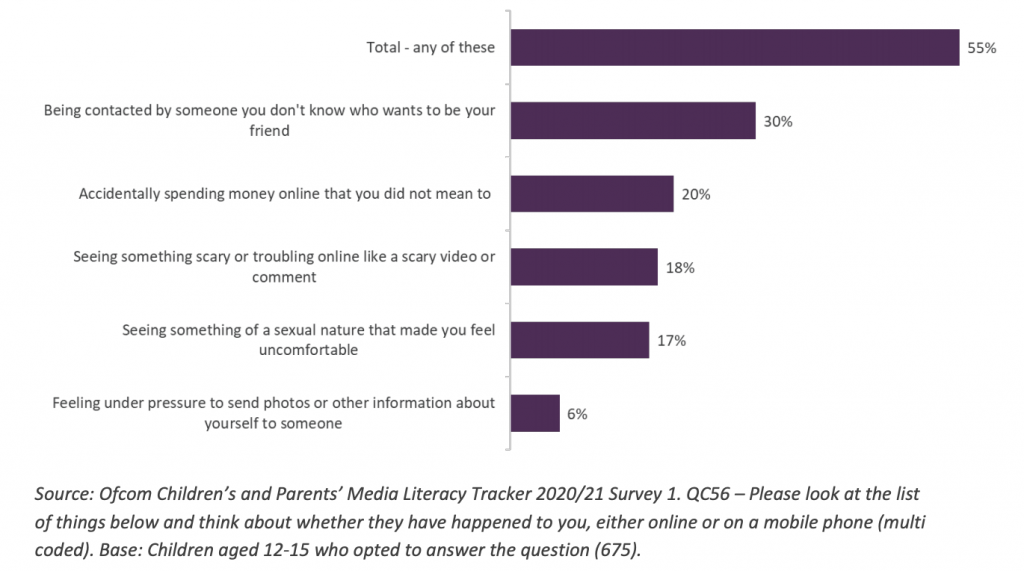

Perhaps connected to the fact that children increasingly know how to bypass online safety controls, the biggest concern emerging from the report is how many UK children reported worrying or troubling experiences online in the past year. Online Nation found that in 2020, 30% of 12- to 15-year-olds had been contacted online by someone they didn’t know who wanted to befriend them, and 17% of the same age group said they had seen something of a sexual nature online that made them feel uncomfortable.

In addition, 18% said they had come across something scary or troubling online, and 6% said they had felt under pressure to send photos or other information about themselves. Children also reported mental health worries linked to their time online, with three quarters of girls aged 7 to 16 saying that “social media can make people worry about their body image”.

Preventing children from accessing content that may trouble or scare them, and protecting them from inappropriate contact with strangers online, is a problem parents have been battling for decades. Although the tools for blocking and reporting troubling content or approaches have improved across platforms, the Ofcom report makes clear that the scale of the issue is vast, affecting nearly half of all UK children in the past year alone.

The UK’s burgeoning ‘safety tech’ sector may offer some hope. In May 2020, the Department for Digital, Culture, Media and Sport (DCMS) report ‘Safer Technology, Safer Users: The UK as a world-leader in Safety Tech’ provided an overview of the UK’s growing online safety technology sector and highlighted a range of innovative companies tackling online harms through varied technical solutions.

These companies are developing technology to help manage and reduce online risks, with the shared aim of keeping users, especially younger users, safer online. Their work ranges from helping law enforcement trace, locate and facilitate the removal of illegal content, to building trusted, age-appropriate platforms, verifying users’ ages, and actively identifying and responding to instances of online harm, bullying, harassment and abuse. Judging from the findings of Online Nation, this industry cannot mature fast enough.